"Why does my RAG suck and how do I make it good?"

The real answer for any AI company or team asking this question

In my consulting work with several cool AI companies, I noticed there's one question that seemingly everyone is asking:

“Why does my RAG suck and how do I make it good?”

I hear this question so much I decided to write a short article answering it. Hopefully some of you will find it helpful. After all, effective Retrieval Augmented Generation can make or break a product (or a company).

Identifying the Problem

The first step is to change how you identify and approach problems. Too often, teams use vague terms like “it feels like” or “it seems like” instead of specific metrics, like “the feedback score for this type of request improved by 20%.”

Teams blindly rush to swap in different models or hope that implementing Elasticsearch will save them. Instead, you should be collecting quantitative metrics for precision, recall, and accuracy (not to mention user satisfaction) and incorporating them into decision-making.

There are many great RAG libraries, tools, and pipelines out there, but you don’t know what will work for your own data and your own use-case.

Road To Production (Skip this part if you’ve already launched)

You’re not going to have real user data before you go into production. So you should start by making a plausible RAG evaluation dataset by mimicking what you expect real users to ask. You don’t need much: a set of one hundred questions will be good enough. To complement this set of questions you’ll need the following:

Retriever ground truth: relevant passages (aka context) for answering questions.

Answer ground truth: the correct answer.

If you’re feeling lazy you can use OpenAI’s GPT-4 or Anthropic’s Claude Sonnet to help you generate the questions.

Then, there are AutoML tools out there like AutoRAG that you can use to optimize your pre-production RAG using this small dataset.

Under the hood, these tools use metrics like recall, precision, and F1 to evaluate the quality of retrieval — and metrics like METEOR, BLEU, ROUGE, or BERTScore (among others) to evaluate the quality of generated answers.
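To make the retrieval metrics concrete, here is a minimal sketch of precision, recall, and F1 for a single question. It assumes every passage has an ID and that your evaluation set stores the IDs of the relevant passages. The `EvalExample` structure, its field names, and the sample IDs are illustrative assumptions, not the format any particular tool expects.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class EvalExample:
    """One row of a hand-built evaluation set (field names are illustrative)."""
    question: str
    relevant_ids: set[str]  # retriever ground truth
    answer: str             # answer ground truth


def retrieval_scores(retrieved_ids: list[str], relevant_ids: set[str]) -> dict[str, float]:
    """Precision, recall, and F1 of one retrieval result against the ground truth."""
    retrieved = set(retrieved_ids)
    hits = len(retrieved & relevant_ids)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant_ids) if relevant_ids else 0.0
    f1 = 2 * precision * recall / (precision + recall) if hits else 0.0
    return {"precision": precision, "recall": recall, "f1": f1}


# Made-up example: two relevant passages, four retrieved, one overlap.
example = EvalExample(
    question="What is the refund window?",
    relevant_ids={"doc-3", "doc-7"},
    answer="30 days",
)
scores = retrieval_scores(["doc-3", "doc-9", "doc-1", "doc-4"], example.relevant_ids)
print(scores)
```

Averaging these per-question scores over the whole evaluation set gives you one number per pipeline configuration to compare.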

AutoML tools use a trial & error approach to find the best combination of components and parameters. The process involves iterating through different modules and parameter configurations to find the optimum combination. Each step of the pipeline is evaluated using the aforementioned metrics.
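The trial-and-error idea can be sketched as an exhaustive grid search, which is the simplest possible version. Real tools may search more selectively, so treat this as an illustration of the loop rather than how AutoRAG works internally. The search space, the parameter names, and `fake_evaluate` (a stand-in for "run the pipeline on the evaluation set and return a mean score") are all hypothetical.

```python
from __future__ import annotations

from itertools import product
from typing import Callable


def grid_search(search_space: dict[str, list], evaluate: Callable[[dict], float]) -> tuple[dict, float]:
    """Try every combination in search_space and keep the best-scoring one."""
    names = list(search_space)
    best_config, best_score = {}, float("-inf")
    for values in product(*(search_space[name] for name in names)):
        config = dict(zip(names, values))
        score = evaluate(config)
        if score > best_score:  # ties keep the first configuration seen
            best_config, best_score = config, score
    return best_config, best_score


def fake_evaluate(config: dict) -> float:
    """Placeholder scorer; in practice this would run the pipeline on your eval set."""
    score = 0.5
    score += 0.2 if config["retriever"] == "hybrid" else 0.0
    score += 0.1 if config["top_k"] == 10 else 0.0
    score -= 0.05 if config["chunk_size"] == 1024 else 0.0
    return score


space = {
    "retriever": ["vector", "bm25", "hybrid"],
    "top_k": [5, 10, 20],
    "chunk_size": [256, 512, 1024],
}
best_config, best_score = grid_search(space, fake_evaluate)
print(best_config, round(best_score, 2))
```

The number of runs grows multiplicatively with every parameter you add (27 here), which is one reason a small evaluation set of around a hundred questions is practical.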

The end result is an optimized RAG pipeline with a retrieval mechanism, specific LLM, and enhanced system prompts. Now you’re ready to start testing with real users.

Launch and Observability

Once you launch your product, your main challenge will be effective observability. By this point you will probably have a RAG pipeline that has:

Questions being asked

Vector embeddings search for retrieval

Extra points if you also have traditional text search

This is where you realize your RAG sucks, but you have no idea how to make it good. And the reason you have no idea is because you have no observability. This can easily be solved by collecting and analyzing information for every response you generate:

Thumbs up/down feedback

Important to ask users the right thing when asking for feedback

Instead of asking a vague question like “how did we do”, ask “did we answer your question”.

Even better if you have checkboxes to categorize feedback.

Reranker score for your retrieved context

I personally like Cohere’s reranker: https://cohere.com/rerank

A reranker reorders your retrieved context snippets based on how relevant each one is to the query. As part of this process, it scores every snippet, which makes for a very powerful metric.

Cosine similarity scores for retrieved embeddings

Average values of these metrics and how they correlate with user feedback (thumbs up/down)
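One way to capture all of this is a single log record per generated response. The sketch below is an assumption about what such a record could look like: the `ResponseLog` class, its field names, and the toy two-dimensional vectors are made up for illustration, and the reranker scores are hard-coded where a real system would store whatever its reranker returned.

```python
from __future__ import annotations

import json
import math
from dataclasses import asdict, dataclass, field
from statistics import mean
from typing import Optional


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class ResponseLog:
    """Everything worth keeping about one generated response (illustrative schema)."""
    question: str
    cosine_scores: list[float]
    rerank_scores: list[float]
    thumbs_up: Optional[bool] = None  # None until the user gives feedback
    feedback_tags: list[str] = field(default_factory=list)

    def summary(self) -> dict:
        """Flatten the record and add the per-response averages."""
        row = asdict(self)
        row["avg_cosine"] = mean(self.cosine_scores) if self.cosine_scores else None
        row["avg_rerank"] = mean(self.rerank_scores) if self.rerank_scores else None
        return row


query_vec = [1.0, 0.0]
chunk_vecs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
log = ResponseLog(
    question="Do you ship to Canada?",
    cosine_scores=[cosine_similarity(query_vec, v) for v in chunk_vecs],
    rerank_scores=[0.9, 0.1, 0.5],  # placeholder values; use your reranker's output
    thumbs_up=True,
    feedback_tags=["answered_question"],
)
summary = log.summary()
print(json.dumps(summary))
```

Writing one JSON line like this per response is enough to support the analysis described in the rest of this article.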

By making this simple change, you can suddenly start analyzing how effectively the system is performing by examining the results it returns. For example, let's say a user asks something, and to respond we retrieve 10-20 snippets of context. We can now answer:

What was the cosine similarity of the text chunks?

What were the Cohere relevance scores for those text chunks?

What are the averages?

Do those average values predict whether the response gets a thumbs up or a thumbs down?

If these metrics don't align with the thumbs feedback, it raises a question about whether the feedback prompt is measuring the right thing.

There is a big difference between “how did we do” and “did we answer your question”.
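A quick way to check whether an average score predicts the thumbs feedback is to correlate the two. The sketch below computes a plain Pearson correlation between the average reranker score and a 0/1 thumbs value (with a binary variable this is also known as the point-biserial correlation). The six log rows are invented for illustration, and the `avg_rerank` and `thumbs_up` field names are assumptions.

```python
import math


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient; returns 0.0 if either series is constant."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        return 0.0
    return cov / math.sqrt(var_x * var_y)


# Made-up logs: one row per response that received feedback.
logs = [
    {"avg_rerank": 0.9, "thumbs_up": True},
    {"avg_rerank": 0.8, "thumbs_up": True},
    {"avg_rerank": 0.7, "thumbs_up": True},
    {"avg_rerank": 0.3, "thumbs_up": False},
    {"avg_rerank": 0.2, "thumbs_up": False},
    {"avg_rerank": 0.4, "thumbs_up": True},
]
r = pearson(
    [row["avg_rerank"] for row in logs],
    [1.0 if row["thumbs_up"] else 0.0 for row in logs],
)
print(f"correlation between avg rerank score and thumbs up: {r:.2f}")
```

A value near zero on real data would suggest that either the score or the feedback question is not capturing answer quality.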

Important: Even if you use traditional retrieval (anything other than vector search), you should still run your retrieved context through a reranker and collect the scores.

Analyzing Production Performance

Once you have observability, you can begin analyzing production performance.

We can categorize this analysis into two main areas:

Topics: This refers to the content and context of the data, which can be represented by the way words are structured or the embeddings used in search queries. You can use topic modeling to better understand the types of responses your system handles.

E.g. People talking about their family, or their hobbies, etc.

Capabilities (Agent Tools/Functions): This pertains to the functional aspects of the queries, such as:

Direct conversation requests (e.g., “Remind me what we talked about when we discussed my neighbor's dogs barking all the time.”)

Time-sensitive queries (e.g., “Show me the latest X” or “Show me the most recent Y.”)

Metadata-specific inquiries (e.g., “What date was our last conversation?”), which might require specific filters or keyword matching that go beyond simple text embeddings.

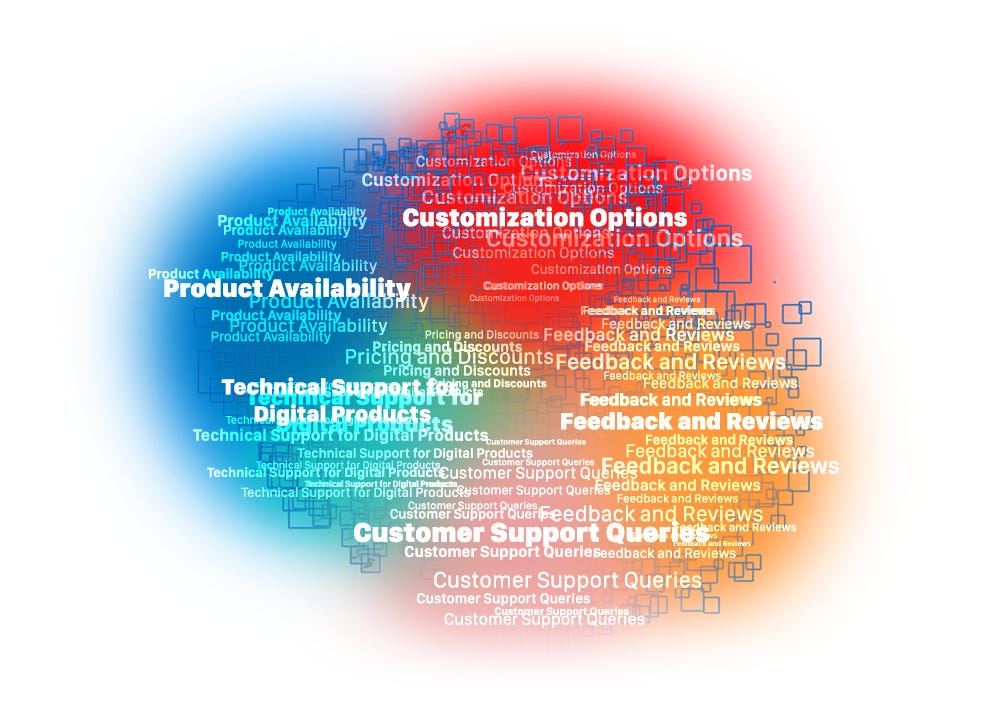

By applying clustering techniques to these topics and capabilities (I cover this in more depth in my previous article on K-Means clustering), you can:

Group similar queries/questions together and categorize them by topic e.g. “Product availability questions” or capability e.g. “Requests to search previous conversations”.

Calculate the frequency and distribution of these groups.

Assess the average performance scores for each group.
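Once every logged query carries a group label, the frequency and average-performance steps are a simple aggregation. This sketch assumes the labels already exist (from clustering or any other method) and that each record has `group`, `thumbs_up`, and `avg_rerank` fields. Those names and the four sample records are hypothetical.

```python
from collections import defaultdict


def group_stats(records: list[dict]) -> dict[str, dict]:
    """Count, share of volume, thumbs-up rate, and mean rerank score per group."""
    groups = defaultdict(list)
    for record in records:
        groups[record["group"]].append(record)

    total = len(records)
    stats = {}
    for name, rows in groups.items():
        # Only responses that actually received feedback count toward the rate.
        rated = [row for row in rows if row["thumbs_up"] is not None]
        stats[name] = {
            "count": len(rows),
            "share": len(rows) / total,
            "thumbs_up_rate": sum(row["thumbs_up"] for row in rated) / len(rated) if rated else None,
            "avg_rerank": sum(row["avg_rerank"] for row in rows) / len(rows),
        }
    return stats


records = [
    {"group": "Product availability questions", "thumbs_up": True, "avg_rerank": 0.8},
    {"group": "Product availability questions", "thumbs_up": True, "avg_rerank": 0.6},
    {"group": "Product availability questions", "thumbs_up": None, "avg_rerank": 0.7},
    {"group": "Requests to search previous conversations", "thumbs_up": False, "avg_rerank": 0.2},
]
stats = group_stats(records)
for name, row in stats.items():
    print(name, row)
```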

Prioritizing Improvements

This data-driven approach allows us to prioritize system enhancements based on actual user needs and system performance. For instance:

If person-entity-retrieval commands a significant portion of query volume (say 60%) and shows high satisfaction rates (90% thumbs up) with minimal cosine distance, this area may not need further refinement.

Conversely, queries like "What date was our last conversation" might show poor results, indicating a limitation of our current functional capabilities. If such queries constitute a small fraction (e.g., 2%) of total volume, it might be more strategic to temporarily exclude these from the system’s capabilities (“I forget, honestly!” or “Do you think I'm some kind of calendar!?”), thus improving overall system performance.

Handling these exclusions gracefully significantly improves user experience.

When appropriate, use humor and personality to your advantage instead of saying “I cannot answer this right now.”
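A graceful exclusion can be as small as a lookup that runs before the RAG pipeline. The sketch below assumes each incoming query has already been tagged with a capability label. The `metadata_lookup` label, the `FALLBACKS` table, and the `run_rag` callable are hypothetical names used only for illustration.

```python
from typing import Callable

# Capabilities we have deliberately switched off, with an in-character reply for each.
FALLBACKS = {
    "metadata_lookup": "I forget, honestly!",
}


def respond(capability: str, question: str, run_rag: Callable[[str], str]) -> str:
    """Return a canned reply for excluded capabilities, otherwise run the normal pipeline."""
    fallback = FALLBACKS.get(capability)
    if fallback is not None:
        return fallback
    return run_rag(question)


def fake_rag(question: str) -> str:
    """Stand-in for the real pipeline so the example runs on its own."""
    return f"(RAG answer to: {question})"


print(respond("metadata_lookup", "What date was our last conversation?", fake_rag))
print(respond("topic_question", "What did I say about my hobbies?", fake_rag))
```

Keeping the exclusions in one table also makes it easy to remove an entry once the missing capability has been built.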

By segmenting questions and evaluating both the frequency and quality of each segment, we can prioritize improvements based on the actual impact rather than attempting to achieve an elusive 100% accuracy. This strategic focus allows you to enhance the system's effectiveness where it matters most, directing efforts towards more frequently problematic areas which represent a higher percentage of user interactions.
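The prioritization logic can be reduced to one number per segment: the share of all interactions that end badly, which is volume share times (1 − satisfaction rate). In the sketch below, the 60% / 90% and 2% figures come from the examples above. The 20% satisfaction rate for the date lookup and the entire "time-sensitive queries" row are invented for illustration, and the remaining volume is left out.

```python
segments = [
    {"name": "person-entity retrieval", "share": 0.60, "thumbs_up_rate": 0.90},
    {"name": "time-sensitive queries", "share": 0.25, "thumbs_up_rate": 0.55},  # hypothetical
    {"name": "last-conversation date", "share": 0.02, "thumbs_up_rate": 0.20},  # rate is hypothetical
]


def unhappy_share(segment: dict) -> float:
    """Fraction of ALL interactions that fall in this segment and get a thumbs down."""
    return segment["share"] * (1 - segment["thumbs_up_rate"])


# Largest pool of unhappy interactions first.
ranked = sorted(segments, key=unhappy_share, reverse=True)
for segment in ranked:
    print(f'{segment["name"]}: {unhappy_share(segment):.1%} of all interactions end badly')
```

Under these assumed numbers, the segment with the worst satisfaction rate ranks last because it is so rare, which is the argument for excluding it gracefully rather than fixing it first.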

Once we have all of this in place, we may dedicate some team resources to a handful of new tools that would bridge the important gaps we found:

Maybe a new ability to search previous conversations

Or a new ability to do RAG on time-sensitive queries

Each of these distinct tools can be prioritized and invested in so that it improves over time. The hard part is deciding how to spend the time and resources to make these systems better.

TL;DR

Getting your RAG system from “sucks” to “good” isn't about magic solutions or trendy libraries. It's about adopting a methodical, data-driven approach. Start with specific metrics, create a robust evaluation dataset, and leverage tools like AutoRAG to fine-tune your pipeline.

Once live, implement strong observability practices to continuously analyze and improve performance. Cluster collected data into topics & capabilities to have a clear picture of how people are using your product and where it falls short. Prioritize enhancements based on real usage and remember, a touch of personality can go a long way in handling limitations.

By following these steps, you'll turn your RAG system into a reliable, high-performing tool that meets your users' needs and exceeds their expectations.

The Seven Major Challenges Faced by RAG Technology

- Missing Content: Solutions include data cleaning and prompt engineering to ensure the quality of input data and guide the model to answer questions more accurately.

- Unrecognized Top Ranking: This can be resolved by adjusting retrieval parameters and optimizing document ranking to ensure the most relevant information is presented to the user.

- Insufficient Background: Expanding the scope of processing and adjusting retrieval strategies are crucial to include a broader range of relevant information.

- Incorrect Formatting: This can be addressed by improving prompts and using output parsers, including Pydantic parsers, which help return information in the format the user expects.

- Incomplete Parts: Query transformation can resolve this issue by ensuring a comprehensive understanding of the question, so that the response covers all of it.

- Unextracted Parts: Data cleaning, prompt compression, and LongContextReorder are effective strategies for addressing this challenge.

- Incorrect Specificity: This can be solved by more refined retrieval strategies such as Auto Merging Retriever, metadata replacement, and other techniques to further improve the precision of information retrieval.